One of the most useful questions I've used for evaluating expertise during a hiring interview is:

Tell me about a time when you did something you thought was right, and later it turned out to be a mistake.

That kicks off a series of additional questions and followups:

- What was the context?

- Why did you think you were right and how did you advocate for it?

- How and when did you learn you were wrong?

- How did you address it?

- What lessons did you learn from it?

- What needed to be true for you to have been right?

This drip of questions typically takes 10-15 minutes of interviewing time. It's a variation on the "tell me about a time you changed your mind about something" question, but provides very different signals. Importantly, it is not a "tell me about a mistake you've made" question, which looks similar on paper but misses the point. And that point is: when did a candidate do something that at the time seemed right enough to them and to others, and only in hindsight was revealed to be the wrong approach in some critical way?

Here's what it does, why it works, and the signals it unpacks:

1. It interrupts interviewing autopilot

It's an uncommon framing that breaks common interviewing patterns, forcing people to think in real time, something AI and memorized answers struggle to fake. I sometimes open the interview with this question to set the tone for an authentic conversation and get someone out of performance mode.

2. It checks for introspection and situational awareness

Introspection and situational awareness are critical pieces of expertise: thinking about yourself, understanding your behaviors, putting them in context, and changing based on what you learn. This question pokes at that mechanism; everyone's been wrong before, but not everyone reflects on it or adapts their behavior in response.

Furthermore, a breadth of lived experience suggests some accumulation of mistakes, errors, and general wrongness along the way. All things considered, it's extremely unlikely you're interviewing someone who is perfect (possible but not probable). This is a good thing! Mistakes and errors are an inevitable part of growth, and these questions aim to uncover examples (and awareness) of such growth. Probing into what a person did after they learned they were wrong helps you understand their ability to react to new information that might be different from what they already had in mind — a good signal into their decision-making and information-seeking behaviors.

3. It identifies level-appropriate thinking

The magnitude of the mistake should match the seniority of the role. A senior architect who's never made anything worse than a syntax error either hasn't been given senior-level responsibilities, lacks good feedback systems, or lacks the self-awareness to recognize their strategic missteps. Conversely, a junior developer who talks about betting the company on the wrong database is either inflating their actual influence or has been working in an extremely immature company. The sweet spot is when candidates describe mistakes that match their claimed level of responsibility — senior folks should have examples involving architecture, strategy, or team direction. Their examples should show they understand the weight of irreversible decisions and have lived with the long-term consequences of their choices. If they haven't, they're probably not as senior as they claim.

4. It evaluates potential vs. actual bounds of expertise

The framing of the question seeks out an interesting situation: the candidate was allowed to take on some work, but ended up being wrong in some way about it. This situation describes the upper bound of a candidate's expertise at some problem set at a point in time.

That they were allowed (or assigned) a certain level of work means they had organizational trust to take it on (most people assign work to meet a person's level). That they did not meet that goal in some critical way means the work had some elements beyond the candidate's capabilities at the time. That's their local upper bound at that moment: the line between their perceived expertise and their actual expertise. The distance between the two shows how close they are to (or how far they are from) closing that gap.

Of course, not every example sends this signal. A strong followup to probe whether it's a true signal is: "Was this kind of decision/project representative of the work you were doing at the time?"

5. It also describes the candidate's operating environment

When interviewing engineers, the error they describe making is a good baseline for the level of autonomy and trust they had in making decisions. It's the difference between "my error was a bug" vs. "I prototyped a production system on Google Apps Script and was stuck maintaining the prototype for a year" vs. "I chose the wrong language for the project."

How such errors were caught and corrected says a lot about the support systems around them — whether they learned early through mentorship or only after consequences. It's the difference between "… and my manager caught it in review and told me why this was wrong and explained the right way of doing it" vs. "… and we shipped that code and six months later we had to rewrite the whole damn thing because it became unmaintainable under pressure." That typically gives me a range of whether the candidate is a generalist who can do a lot on their own or someone who really thrives on a team with specialization and well-defined roles/responsibilities.

6. It explores comfort with learning

At Amazon, there's a leadership principle that goes:

Leaders are right a lot. They have strong judgment and good instincts. They seek diverse perspectives and work to disconfirm their beliefs.

In building a high-learning team, we flipped this on its head: team members are allowed to be wrong, a lot. But they're wrong in constantly new ways. They test boundaries, push their capabilities, and experiment. The only "sin" in a learning environment is repeating the same mistakes over and over.

Given that growth comes from working at the edges of your expertise, my corollary is a belief that you learn more from mistakes than successes. Our team's operating philosophy was to lean into mistakes as learning opportunities and good signals to check our understanding and assumptions around a problem. So having someone comfortable with airing out embarrassing details and thinking critically about them was a good cultural signal.

7. It pokes at confidence and ambition

It's critical to understand if candidates will speak up and be willing to be wrong in public ways — their confidence in their expertise and standing among peers. How candidates advocated for their (wrong) ideas shows their willingness to speak up and defend what they thought was right — critical for healthy technical discussions. It's a reliable signal for both personal confidence (speaking up) and technical confidence (details of their solution). Someone's level of confidence to put themselves out there is generally a good sign of someone's tolerance for risk and potential for growth.

However, you don't want to bias your interviewing for overconfidence. Double-click into why someone thought they were right and why they were willing to defend that position. The useful followup around this is: Why did you believe something? What path of analysis or thinking got you to that place, and got you to defend that position?

As a corollary to unpacking a candidate's operating environment, a good followup is: "Was it normal for suggestions on how to solve a problem to come from the team or was there something unique in this situation?"

8. It shows how someone generalizes information

I care about what they take away from the error. Some candidates learn great lessons; others have takeaways I would have facepalmed myself over, had I not been on camera. Do they view mistakes as valuable learning opportunities or as failures to be minimized? Do they generalize a lesson or walk away with a narrow, situation-specific takeaway?

The prompt for "bigger mistakes" usually has a stronger correlation to more interesting lessons. A memorable exchange with one candidate was about how a technical solution that worked for them at one company failed when they applied it to another company — all sorts of healthy discussion around that!

Following up with "... and what might have needed to be true for you to be right?" probes someone's openness to change and where they place responsibility. It's the difference between "other people should have been different" and "I should have known to check for X details first."

9. It reveals individual contribution (not team achievements)

One of the most critical signals this question uncovers is the difference between individual expertise and organizational expertise. Many candidates unconsciously slip into "we" language when describing their work: "We decided to implement microservices," or "We realized the approach wasn't working."

This matters because you're hiring an individual, not their previous team. When you hear "we," always follow up with clarifying questions: "To clarify, you personally made that decision?" or "What was your specific role in realizing the approach wasn't working?"

The best candidates can clearly articulate their personal contributions while still acknowledging team dynamics. They'll say things like "I advocated for the approach, and convinced the team because..." or "The team was split, but I pushed for X because Y." This precision reveals both their actual expertise and their self-awareness about their role in group decisions.

Watch out for candidates who can't differentiate their contributions from their team's. When pressed for specifics, they either deflect ("It was really a team effort") or claim credit unconvincingly ("Yes, I did all of that"). Both responses suggest either a lack of individual impact or a lack of honesty, and you want neither. And if you're not confident in their answer, continue to push into the details; an intricate understanding of the details, and the ability to navigate them, is the lifeblood of expertise.

Will It Work For You?

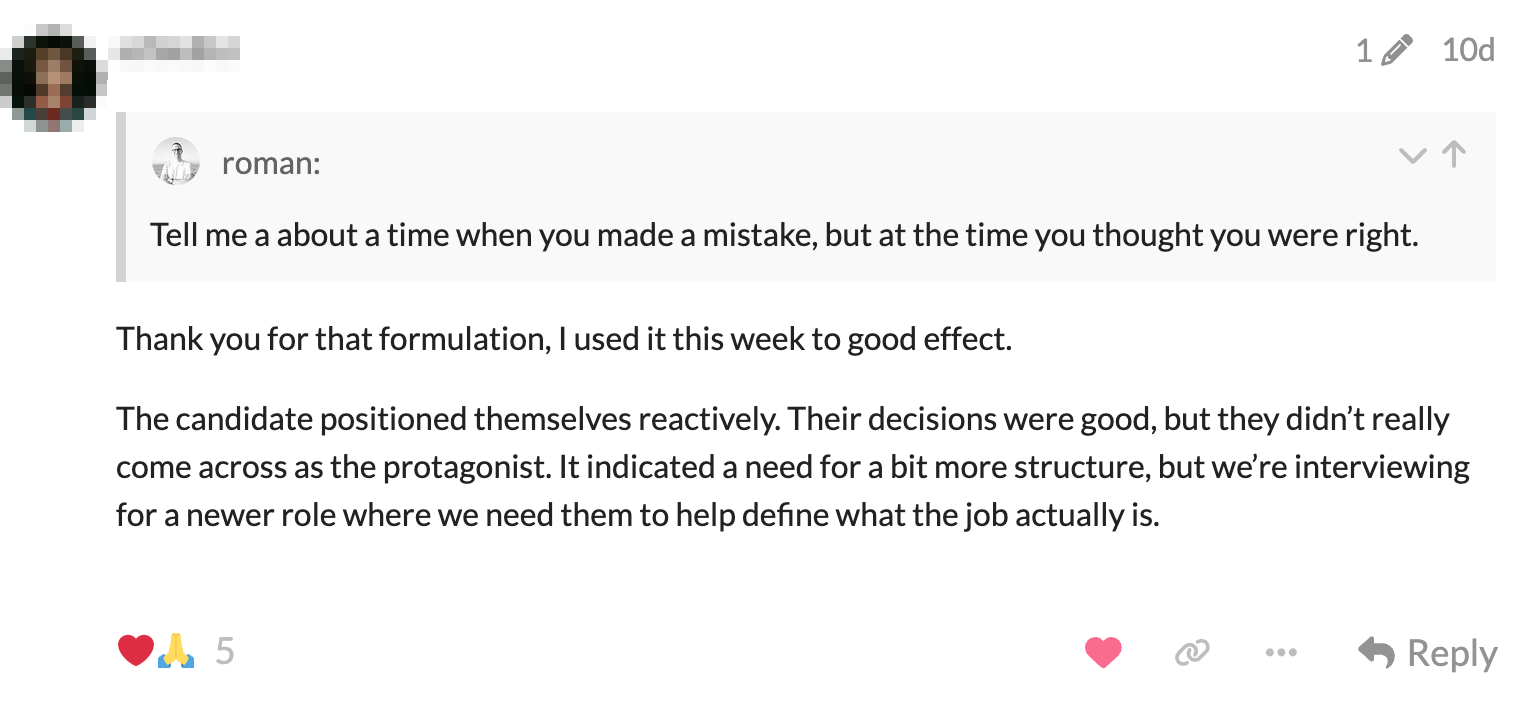

Before writing this post, I shared an abridged version in a forum of colleagues. Within days, folks began to share stories about what they were able to identify in candidate conversations that wasn't obvious before. Here's an example:

This technique helped identify not just technical competence but also how candidates operate within organizations—whether they drive initiatives or primarily respond to direction, whether they learn from mistakes or repeat them, and whether they can adapt to your specific context.

Closing Thoughts

To implement this in your own hiring process:

- Make the time, set the tone

Introduce the question early in the interview to set an authentic tone. Make sure you can spend time digging into the details and pulling on the various threads that come up.

- Listen actively, follow up

Listen for signals beyond the technical details of the mistake. Don't make assumptions about the candidate; ask followup questions.

- Double down on specificity

Seek out individual experience. Avoid hypotheticals or generic answers. Seek out the context around the answers.

- Compare patterns across candidates for the same role.

Remember, the goal isn't to judge candidates for making mistakes — it's to understand how they process, learn from, and adapt after those mistakes. That capacity for growth and self-correction is often a stronger predictor of success than any perfect track record. And by leading with vulnerability (asking about mistakes), you create permission for honesty. It doesn't just reveal how candidates think — it changes how they engage.

The best interviews with this question don't feel like interviews at all. They feel like two people working a problem together.

And isn't that exactly what you're trying to understand?

Appendix:

Interested in continuing to learn more about teasing signals of expertise from hiring interviews? This post has an appendix with additional techniques and information:

Thanks for reading

Useful? Interesting? Have something to add? Shoot me a note at roman@sharedphysics.com. I love getting email and chatting with readers.

Who am I?

I'm Roman Kudryashov -- I help healthcare companies solve challenging problems through software development and process design. My longer background is here and I keep track of some of my side projects here.

Stay true,

Roman