I've recently found myself explaining what software development is to people who are on the periphery of technical work but don’t know how to approach it.

Some of these folks are aspiring software developers. Others are product managers trying to find ways to work better with their technical counterparts. Others are business leaders trying to make sense of the engineering department and why headcount seems to grow so fast.

This is my reusable summary of what goes into “building and running software on the web” for people who aren’t software developers, based on those chats, emails, and conversations.

The “Work” of Building & Running Software

Software development can be split into six areas of activity:

- UIs: Building user interfaces

- Algorithms: Transforming data & logical operations

- Data Management: Moving, storing, and retrieving data

- Quality: Troubleshooting and testing

- Operations: DevOps, managing environments and infrastructure

- Technical Debt: Refactoring and performance enhancement

- (And if you’re inclined: planning, estimating, scoping, and architecting are a seventh activity)

The UI Work: Building User Interfaces

The user interface (or UI) is the part of the program that most people interact with. This includes accessing a web page, loading an app, clicking through a slideshow, playing a game, interacting with VR, and a whole lot of other stuff. If you interact with it, it’s a user interface!

UIs come in many different forms. The ones most people are familiar with are Graphical User Interfaces, or GUIs. You can also have text-based interfaces (such as a chatbot like ChatGPT), voice interfaces (such as Alexa or Siri), virtual reality interfaces, and command-line interfaces (CLIs) for interacting more directly with the computer itself. You can also have physical interfaces (buttons, switches) but those move into the realm of hardware engineering.

The goal of a UI is to let you interact with the program. A UI allows you to do something, captures that input, and then returns something to you.

That said, a UI is different from the UX, or User Experience. The UI is strictly about the interface, while the UX is a more holistic view of a user’s experience, perception, and goals. It is kind of like the difference between tactics (how you end up doing it) and strategy (what you are trying to do). Software development can sometimes conflate the two, but the practice of UX can encompass activities beyond software development, including communications and copywriting, growth, marketing, graphic design, service design, and education.

UIs are the most prominent and visible parts of a program, for obvious reasons. When most people think of software, they think of the software’s UI… and not what’s under the hood.

The Algorithms Work: Transforming Data & Logical Operations

UIs are the facade that hides software's real work, which is to say the running of algorithms: rules for data transformation and logical operations. This is a fancy way of saying “turning inputs into outputs”.

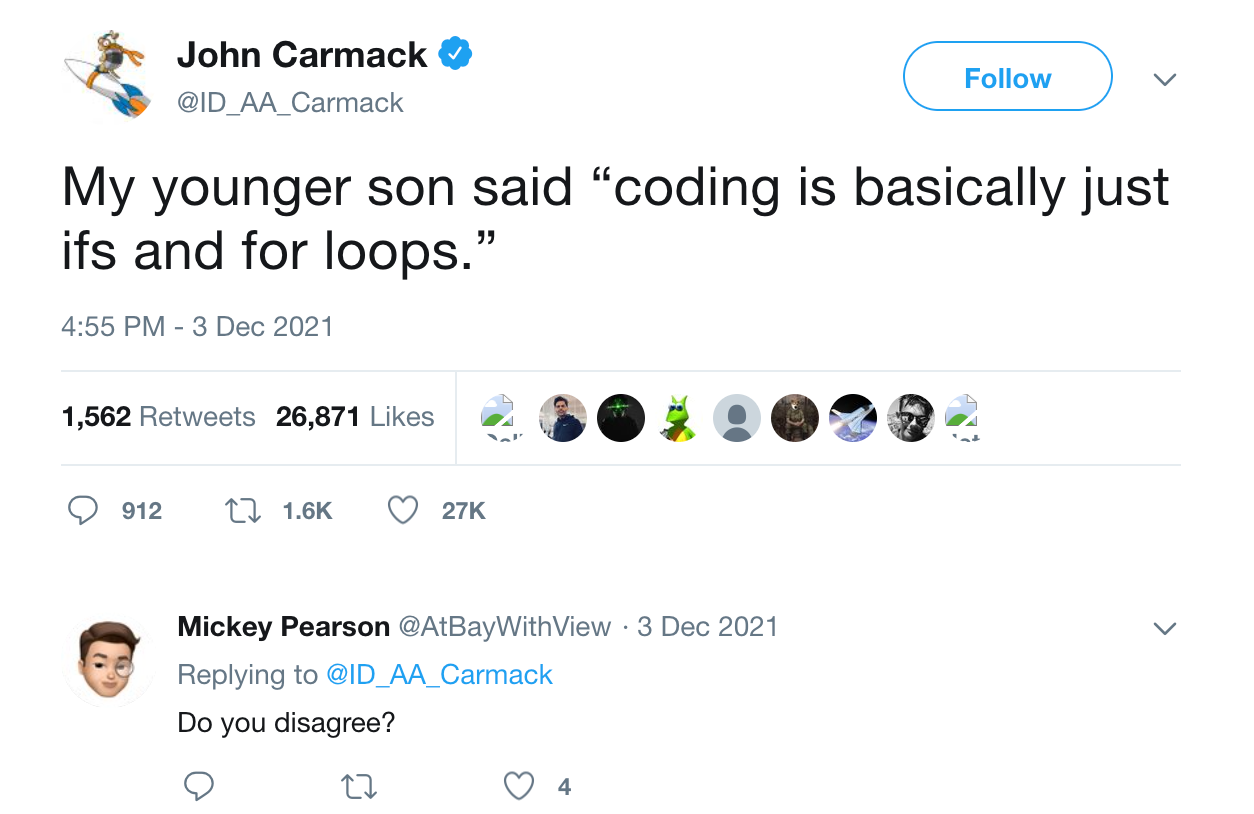

At the most basic level, an algorithm is a set of instructions. For example, a recipe is an algorithm. When a developer writes code, they’re writing a lot of different instructions for the computer to follow.

Some of these algorithms are simple, such as addition and subtraction. Some algorithms are very well known, such as Binary Search or Quicksort. Other algorithms are extremely complicated: sets of instructions upon instructions, loops upon loops of branching if/then/for/when statements. Many algorithms come prewritten into the tools (the programming languages and frameworks) a software developer is using and can be called upon as a named “function”, and some of those tools are better or worse than others at solving the programming challenge at hand.
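To make that a little more concrete, here is a minimal Python sketch of Binary Search written out by hand, next to a version that leans on the prewritten `bisect_left` function from Python's standard library. The function names `binary_search` and `library_search` and the sample list are made up for this illustration.

```python
from bisect import bisect_left


def binary_search(sorted_items, target):
    """Return the index of target in sorted_items, or -1 if it is absent."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        middle = (low + high) // 2
        if sorted_items[middle] == target:
            return middle
        if sorted_items[middle] < target:
            low = middle + 1   # target can only be in the upper half
        else:
            high = middle - 1  # target can only be in the lower half
    return -1


def library_search(sorted_items, target):
    """Same lookup, using the standard library's prewritten bisect_left."""
    index = bisect_left(sorted_items, target)
    if index < len(sorted_items) and sorted_items[index] == target:
        return index
    return -1


prices = [3, 8, 15, 23, 42, 57]  # binary search only works on sorted data
hand_written = binary_search(prices, 23)
prewritten = library_search(prices, 23)
```

Both versions find the same position. The second is shorter because someone else already wrote (and debugged) the hard part.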

When we talk about algorithms in software development, most of the time it is either to transform data (e.g., 2+2=4 … or 2+2=22 if you want), or to perform a logical operation (such as if/then, or, and, for, when, etc). Here’s a pseudocode example:

if form_data="lettuce" {

select field "color"

update text="green"

}

else if form_data="radish" {

select field "color"

update text="red"

}

When you’re writing code, you can give it a name and then reference it again later. For example, if I write

define function foodColors() {

(insert pseudocode from above)

};

… then I can reuse the algorithm/function foodColors() by writing just the name instead of the entire block of code.
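For anyone curious what the pseudocode above might look like in a real language, here is one possible Python version. The name `food_color` and the `"unknown"` fallback are choices made for this sketch; the pseudocode does not say what should happen for other foods.

```python
def food_color(form_data):
    """Return the color to show for a food typed into a form."""
    if form_data == "lettuce":
        return "green"
    elif form_data == "radish":
        return "red"
    # Assumption: the pseudocode has no "otherwise" case, so this sketch picks one.
    return "unknown"


# Reusing the function by name instead of repeating the if/else block.
salad_colors = [food_color(item) for item in ["lettuce", "radish", "tofu"]]
```

Calling `food_color` three times costs three short words, not three copies of the if/else block.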

Algorithms, data transformations, and logical operations are what the software does. In the same way that the UI is the piece that most people think is the software, the algorithms are the software-y part of the software.

The Data Work: Moving, Storing, and Retrieving Data

While you can write software that starts up, does one thing, and then ends, most software is designed to work over an unspecified period of time. You need to be able to move, store, and retrieve data to allow this to happen.

Data can be stored in many different ways. You can store it in the computer’s temporary memory or cache, in a file (such as a text or a .CSV – comma separated values – file), or in a database.
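As a rough sketch of those three options, here is the same tiny record stored in memory, in a CSV file, and in a database, using only Python's standard library. The task record and the file name `tasks.csv` are invented for the example, and SQLite stands in for "a database" simply because it ships with Python.

```python
import csv
import os
import sqlite3
import tempfile

task = {"id": 1, "title": "Buy bread"}

# 1. Temporary memory: gone as soon as the program stops.
in_memory = {task["id"]: task["title"]}

# 2. A CSV file: survives after the program ends.
csv_path = os.path.join(tempfile.mkdtemp(), "tasks.csv")
with open(csv_path, "w", newline="") as handle:
    writer = csv.writer(handle)
    writer.writerow(["id", "title"])
    writer.writerow([task["id"], task["title"]])

with open(csv_path, newline="") as handle:
    from_file = list(csv.DictReader(handle))

# 3. A database. ":memory:" keeps this demo self-contained; a real
# application would point at a file or a database server instead.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE tasks (id INTEGER PRIMARY KEY, title TEXT)")
connection.execute("INSERT INTO tasks VALUES (?, ?)", (task["id"], task["title"]))
from_database = connection.execute("SELECT id, title FROM tasks").fetchall()
connection.close()
```

One detail worth noticing: the CSV file hands the ID back as the text `"1"`, while the database hands back the number `1`. Plain files tend to forget what type the data was.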

Once you store the data, you also need to retrieve it… which is a little bit harder. You need to build a query for this, which is a combination of filters, sorts, limits, and identifiers that get you the exact thing you’re looking for. If you don’t properly label and organize your data (such as with unique IDs per row), retrieving it can be a mess!
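Here is a small sketch of what such a query can look like, using SQL through Python's built-in `sqlite3` module. The `tasks` table, its columns, and the sample rows are all hypothetical.

```python
import sqlite3

connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE tasks (id INTEGER PRIMARY KEY, owner TEXT, title TEXT, done INTEGER)"
)
connection.executemany(
    "INSERT INTO tasks (id, owner, title, done) VALUES (?, ?, ?, ?)",
    [
        (1, "ana", "Write report", 0),
        (2, "ben", "Fix login bug", 0),
        (3, "ana", "Book venue", 1),
        (4, "ana", "Email client", 0),
    ],
)

# Filter (WHERE), sort (ORDER BY), and limit (LIMIT) in one query.
open_tasks_for_ana = connection.execute(
    "SELECT id, title FROM tasks "
    "WHERE owner = ? AND done = 0 "
    "ORDER BY title ASC "
    "LIMIT 2",
    ("ana",),
).fetchall()

# The unique ID gets you one exact row, with no ambiguity.
task_three = connection.execute(
    "SELECT title FROM tasks WHERE id = ?", (3,)
).fetchone()
connection.close()
```

Without the `id` column, "the venue task" would have to be found by matching on its title, which breaks the moment two tasks share a name.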

So organizing and labeling data is one of the most important factors in storage and retrieval. Data qualifiers and metadata like the aforementioned unique IDs are one step of the puzzle. The other is deciding if you make the data hard to read (for analytics), or hard to write (for running the software).

“Hard to read” is essentially what happens when you ask a developer, “Hey can you pull a spreadsheet of people’s contact information and services they had recently” and they give you a convoluted answer about how the data is split across many different tables and it will take a week to get you that report. It’s hard to “read” the data because the data was organized in a way that makes sense for the application and its performance, but not in a way that makes it easy to read for a layperson.

Here’s another example: let's say you’re making a task management tool. As a developer, it would make sense to have one table store all tasks, a different table to store all users, another to store all comments, another for a list of all projects. It makes sense to separate them out because they might have different relationships; one task might be associated with different projects, some comments might not be visible to some users, and so on. Now if an analyst comes knocking and asks for a report on how many comments a user made on a certain project, the pain is that you have to join and filter a lot of different tables to answer the question. There's no one pre-existing table that shows you those relationships, because those relationships are generated on demand when the application needs them.
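The analyst's question can be sketched in code. This is a simplified, hypothetical version of the task management tool's tables (it assumes each task belongs to exactly one project, which the real tool might not), built with Python's `sqlite3` module.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript(
    """
    CREATE TABLE users    (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE projects (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE tasks    (id INTEGER PRIMARY KEY, project_id INTEGER, title TEXT);
    CREATE TABLE comments (id INTEGER PRIMARY KEY, task_id INTEGER, user_id INTEGER, body TEXT);

    INSERT INTO users    VALUES (1, 'ana'), (2, 'ben');
    INSERT INTO projects VALUES (1, 'Website'), (2, 'Mobile app');
    INSERT INTO tasks    VALUES (1, 1, 'Design homepage'), (2, 1, 'Write copy'), (3, 2, 'Fix crash');
    INSERT INTO comments VALUES
        (1, 1, 1, 'First draft attached'),
        (2, 2, 1, 'Needs a headline'),
        (3, 3, 1, 'Cannot reproduce'),
        (4, 1, 2, 'Looks good');
    """
)

# How many comments did ana make on the Website project?
# Answering means walking comments -> tasks -> projects, and comments -> users.
row = db.execute(
    """
    SELECT COUNT(*)
    FROM comments
    JOIN users    ON users.id = comments.user_id
    JOIN tasks    ON tasks.id = comments.task_id
    JOIN projects ON projects.id = tasks.project_id
    WHERE users.name = ? AND projects.name = ?
    """,
    ("ana", "Website"),
).fetchone()
ana_website_comments = row[0]
db.close()
```

A one-sentence question turned into a query that touches four tables, and this is the tidy toy version.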

“Hard to write” is the opposite: it means organizing information in a way that makes sense for an analyst’s report, but creates application inefficiencies and difficulties. Because of this, developers naturally gravitate towards the “hard to read” approach.

Of course, hard to read/write aren't mutually exclusive approaches. For example, a software developer can design a data “object” by pre-linking data sets with different primary/foreign keys to make it easier to pull together.

When it comes to where the data is stored, as with algorithms, different databases are optimized for different use cases. You might have databases designed like a spreadsheet (and read using a "structured query language", or SQL), or like documents with data nested inside other data (one kind of NoSQL database)… or they might be graph/vector based datastores (I won't get into that). Even databases that look similar (say spreadsheet-style SQL databases) can be optimized for different problems: row-oriented storage keeps each record together, which suits reading and writing whole rows, while column-oriented (columnar) storage keeps each column together, which suits scanning and summarizing a few columns across many rows.
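To give a feel for the difference between the spreadsheet shape and the document shape, here is the same made-up data laid out both ways in plain Python. No real database is involved; the tuples and dictionaries are stand-ins for how each style tends to organize things.

```python
import json

# Spreadsheet-style: flat rows, one table per kind of thing, linked by IDs.
users_table = [(1, "ana")]                                      # (id, name)
tasks_table = [(10, 1, "Write report"), (11, 1, "Book venue")]  # (id, user_id, title)

# Document-style: one record with the related data nested inside it.
user_document = {
    "id": 1,
    "name": "ana",
    "tasks": [
        {"id": 10, "title": "Write report"},
        {"id": 11, "title": "Book venue"},
    ],
}

# Getting ana's task titles from each shape.
titles_from_tables = [title for (_id, user_id, title) in tasks_table if user_id == 1]
titles_from_document = [task["title"] for task in user_document["tasks"]]

# Documents are commonly stored and exchanged as JSON text.
as_json = json.dumps(user_document)
```

The table version needs a filter on `user_id` to find ana's tasks. The document version already has them in one place, at the cost of being awkward if a task ever needs to belong to two users.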

The Quality Work: Troubleshooting, Fixing Bugs, Testing

There’s an oft-quoted saying that “software development is 10% writing code and 90% fixing bugs”. Unless you're a technical savant, this holds true for most people.

This is because algorithms are extremely logical and precise, in the sense that you can’t hand-wave away the details. Let’s say that you’re writing instructions for making a sandwich. If you’re a human, you can deal with a lot of ambiguity:

- Get bread

- Spread peanut butter on it

- Spread jelly on it

- Put more bread on top

- Eat it

If you’re a program, you’ll end up running into a lot of different errors along the way. Here’s the same set of instructions, but interpreted by a program:

- Get bread… (What kind of bread? How much?)

- Spread peanut butter on it (Where did this peanut butter come from? What are you using to spread it? How much? Do you keep the peanut butter out or put it back?)

… at this point, most programs would throw an error of some sort, such as:

- Undefined Error: “Bread” and “Peanut Butter” undefined

- Order of Operations Error: No peanut butter found. Did you mean to open fridge first?

- Operational Error: Tried to spread peanut butter but lid was closed

- Overflow Error: Spread peanut butter all over your table because you didn’t define how much

... and so on. As Carl Sagan once quipped, 'If you wish to make an apple pie from scratch, you must first invent the universe'. Writing software isn't too different. Some programming languages are opinionated and might make a decision for you, some are not and might crash.
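Here is a toy Python version of the sandwich instructions, written to show how a program refuses to guess. Everything in it (the `make_sandwich` function, the `pantry` dictionary, the custom error name) is invented for this sketch.

```python
class MissingIngredientError(Exception):
    """Raised when a step needs something that was never provided."""


def make_sandwich(pantry, slices=2, peanut_butter_tbsp=1, jelly_tbsp=1):
    """Follow the sandwich recipe, refusing to guess at missing details."""
    for ingredient in ("bread", "peanut butter", "jelly"):
        if ingredient not in pantry:
            raise MissingIngredientError(f"'{ingredient}' is undefined")
    if slices < 2:
        raise ValueError("need at least 2 slices to put bread on top")
    if pantry["bread"] < slices:
        raise ValueError("not enough bread in the pantry")
    pantry["bread"] -= slices
    return {
        "slices": slices,
        "peanut_butter_tbsp": peanut_butter_tbsp,
        "jelly_tbsp": jelly_tbsp,
    }


# A human would improvise here; the program stops and complains instead.
try:
    make_sandwich({"bread": 4, "jelly": 1})
    outcome = "sandwich made"
except MissingIngredientError as error:
    outcome = f"error: {error}"

# With every detail spelled out, the same instructions succeed.
sandwich = make_sandwich({"bread": 4, "peanut butter": 1, "jelly": 1})
```

Notice how much of the function is about what could go wrong and how little is about the sandwich.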

And that's before getting to user errors. So after you write your initial set of instructions (the 10% of the work), you’re left with all of the edge cases, exceptions, bugs, and scenarios that you did not account for (the 90% of the work). This is why many developers hate hand-wavy requirements such as “just make this thing”. There are always a lot of assumptions built into that “thing” which a user is too busy to think about and describe, but the developer can’t avoid. This is why most developers will ask a lot of “what about if…” scenarios and provide very specific caveats to what they say.

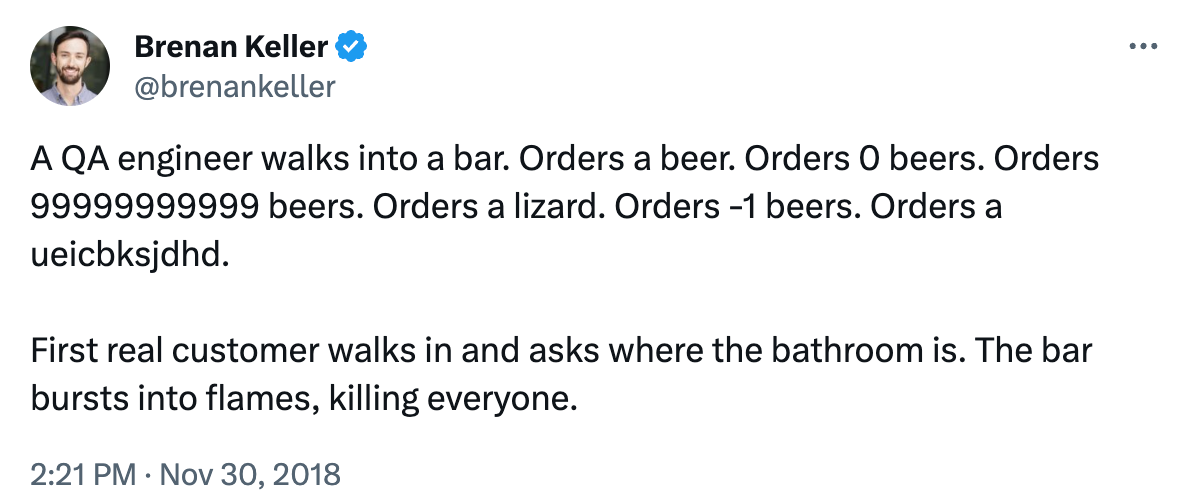

So the way this plays out for most developers is that they write the initial instructions, run a program, find the bugs that crash the program, work through those bugs, run the program again and find the bugs that create unintended consequences but don’t crash the program, fix those, run the program again and find the bugs that result in not meeting the user requirements, fix those, run it again and find security holes, fix those, and keep this going in a very long loop until either the programmer has figured out how to navigate every edge case/input, meets all the requirements but ignores the improbable bugs, or gets bored and hands off the code to someone else to test (QA, the product manager, or the end user) and buys themselves some time before someone finds yet another scenario that doesn’t work.

Testing has its own variations as well. You can be doing unit testing (is a function working?), integration testing (does the software still work with the new code that was added?), or user acceptance testing (UAT, which is about evaluating if the requirements have been met). Some of this testing can be automated (unit testing), some manual (UAT), and some somewhere in between (end to end systems integration testing).
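A minimal sketch of the first two kinds of testing, using Python's built-in `unittest` module. The two functions being tested (`parse_quantity` and `order_total`) are hypothetical stand-ins for a checkout form.

```python
import unittest


def parse_quantity(text):
    """Turn form input such as ' 3 ' into a whole number."""
    return int(text.strip())


def order_total(quantity_text, unit_price):
    """Combine parsing and pricing, the way a checkout page might."""
    return parse_quantity(quantity_text) * unit_price


class UnitTests(unittest.TestCase):
    """Unit test: is one function working on its own?"""

    def test_parse_quantity_ignores_spaces(self):
        self.assertEqual(parse_quantity(" 3 "), 3)

    def test_parse_quantity_rejects_words(self):
        with self.assertRaises(ValueError):
            parse_quantity("three")


class IntegrationTests(unittest.TestCase):
    """Integration test: do the pieces still work together?"""

    def test_order_total_uses_parsed_quantity(self):
        self.assertEqual(order_total(" 3 ", 5), 15)


# Run both suites programmatically so the result can be inspected.
suite = unittest.TestSuite()
loader = unittest.defaultTestLoader
suite.addTests(loader.loadTestsFromTestCase(UnitTests))
suite.addTests(loader.loadTestsFromTestCase(IntegrationTests))
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Once tests like these exist, a computer can rerun them after every change, which is what makes this kind of testing easy to automate. User acceptance testing is harder to capture this way because "did we meet the requirement?" usually needs a person's judgment.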

More philosophically, there are many perspectives on what software 'quality' is and who should be responsible for it… is it on the developer, writing the code? Is it a dedicated quality assurance (QA) tester, or should developers be testing their own code? Is it on the product managers, when writing requirements? Is it on the user, who signed terms and conditions to use the software in a specific way (and why are they trying to enter paragraphs of text into a number field anyway)?

My perspective is that there are two types of “quality”: the software’s ability to meet user expectations and solve problems, and the reliability of the software to run repeatedly without errors.

The Operations Work: DevOps, Managing Infrastructure, and Environments

Making software isn't the only part of the work. Software developers also need to make sure that the software runs somewhere, preferably safely accessible by the users. While software can run exclusively on a single person's computer, most of today's software runs in the cloud, often across many different systems concurrently.

Running software on the web requires answering many questions about the infrastructure and environments; a developer needs to control or decide on a lot of things:

- What kind of computer are they running it on?

- What kinds of supporting software ("packages" and "dependencies") are they using?

- What versions of the aforementioned dependencies are they using?

- How are they moving the code from their computer to somewhere else?

- How are they handling scaling and volume?

… and so on. This is sometimes referred to as DevOps (short for development and operations). If software development is akin to building a house, this DevOps work is akin to figuring out where you’re going to build it, understanding the climate, the regulatory environment, then choosing materials for your house and shipping them to your destination.

DevOps is the answer to when a developer says, “Well it works fine on my computer!” Like I said before, most of today's software is hosted and run in the “cloud”, which is someone else’s very large and distributed collection of computers.

And again, because the specifics matter a lot in software development, even different versions of a software dependency (version 3.1.1 vs. version 3.1.2) can make the difference between something working and breaking. Making sure that the work of one engineer is compatible with the work of another means creating controls around the variables in their programming environment, either by creating “virtual” environments (a temporary workspace with predefined parameters that mimic each other’s workspaces) or through “container” environments (similar, but prepackaged to make it easy to share with others).
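As a simplified illustration of why teams "pin" their dependency versions, here is a small Python sketch that compares what a project expects against what is installed on two machines. The package names, version numbers, and the `find_mismatches` helper are all hypothetical; real tools do this far more thoroughly.

```python
def parse_version(text):
    """Turn '3.1.2' into (3, 1, 2) so versions compare as numbers, not text."""
    return tuple(int(part) for part in text.split("."))


def find_mismatches(pinned, installed):
    """List packages whose installed version differs from the pinned one."""
    problems = []
    for name, wanted in sorted(pinned.items()):
        found = installed.get(name)
        if found is None:
            problems.append(f"{name}: missing (want {wanted})")
        elif parse_version(found) != parse_version(wanted):
            problems.append(f"{name}: have {found}, want {wanted}")
    return problems


# Hypothetical package names and versions, for illustration only.
pinned = {"webframework": "3.1.1", "dbdriver": "2.0.0"}
my_laptop = {"webframework": "3.1.1", "dbdriver": "2.0.0"}
your_laptop = {"webframework": "3.1.2"}

mine = find_mismatches(pinned, my_laptop)
yours = find_mismatches(pinned, your_laptop)
```

Virtual and container environments exist so that the second list stays empty on every machine, including the server.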

Setting up an environment and managing the supporting infrastructure are often both the first and last things a developer will do when writing code. It will be the first thing because this is how they get started. It will be the last thing because it is how they make sure that what works on their computer also works on everyone else’s computers.

In between those environmental bookends, there is also the work of moving the code from one place (your computer) to another (the server, or the repository). Code repositories hosted on services such as GitHub or GitLab are where developers consolidate the work they've been doing (usually on separate branches) into a unified codebase. Code is pushed (uploaded to the shared repository) or pulled (downloaded to your local environment). Changes are recorded as commits and then merged into the codebase, and the more people are working on the codebase, the more often differences between developers' branches need to be reconciled before they get merged.

But that's just the codebase; that codebase can then be moved and combined with the live software either continuously (as in Continuous Integration/Continuous Deployment or CI/CD) or through preplanned and managed releases. The “quality” of a DevOps program is determined by the speed and reliability with which code can be deployed and integrated with other code… but the success of a DevOps program is dependent on the developers’ investment in testing automation and feature flagging (which is the ability to turn features on and off selectively for different users).
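Feature flagging can be implemented in many ways. One common approach, sketched here in Python under my own assumptions, is to turn each user's ID into a stable number from 0 to 99 and switch the feature on for users below a chosen percentage. The flag name `new-checkout` and the `is_enabled` function are made up for the example.

```python
import hashlib


def is_enabled(flag_name, user_id, rollout_percent):
    """Decide whether a user sees a feature, consistently across visits."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # a stable number from 0 to 99 per user and flag
    return bucket < rollout_percent


users = [f"user-{number}" for number in range(1000)]
nobody = [u for u in users if is_enabled("new-checkout", u, 0)]
everybody = [u for u in users if is_enabled("new-checkout", u, 100)]
some = [u for u in users if is_enabled("new-checkout", u, 20)]
```

Because the bucket depends only on the flag name and the user ID, the same user gets the same answer every time, and raising the percentage only ever adds users. That lets a team ship code to production with the feature switched off, then turn it on gradually.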

And as with testing, a DevOps program can be programmatic and automated (infrastructure as code), or completely manual through documentation and individuals at the controls.

The Tech Debt Work: Refactoring and Performance Enhancement

After code is written and is delivered to users, something funny happens: the world changes. At first it is almost imperceptible. People may use and like the software because it helps them do things. Then they realize that they want to do more things, or different things. Or the business grows and there are new problems to solve.

Code is almost always written incrementally, additively. Software developers don’t rewrite the whole program every time you need to add a new feature… they often just write some more code and add it to the code base. As an engineering team grows, more people end up adding things in. Over time, the size and complexity of the codebase increases.

This often results in weird and unintentional behaviors. In best case scenarios, this is a result of accumulating and overlapping algorithms creating unforeseen behaviors… aka emergent complexity. In the worst case, it may reflect natural human error or the politics/organizational design of a company resulting in different parts of a program behaving in different ways.

And as the size of a program grows (in terms of lines of code), it also becomes harder to manage. In a program that is 100 lines long, it is pretty easy to grok everything that is happening. When a program is 100,000 lines long, composed of 200 different files that reference each other, developed by 15 different people over the last 3 years (and that’s a simple example), things get really hard to keep track of.

Another thing that happens is developers take shortcuts to expedite code and feature delivery. After all, most businesses prefer fast delivery (“Can you do it by the end of the week?”). Such work accumulates future debt in exchange for a payoff today.

All of this results in what’s called tech debt. Every change becomes more difficult to make, the program becomes harder to understand and work with, and it breaks more often, for harder-to-discern reasons.

Tech debt is addressed by refactoring, which is to go back and clean up yesterday’s code to meet today’s business requirements. This is a matter of rewriting or simplifying code, which is valuable meta-work for developers but not an obvious benefit to a business, because superficially it’s a lot of effort to make something that… does nothing new? I mean, have you ever tried to pitch to your CEO that the team needs to pause work on all new features while they clean up, often for a quarter or two? (For the record, I’ve listened to such conversations and they went as ridiculously as you would think).
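A tiny, hypothetical example of what a refactor looks like, building on the lettuce/radish pseudocode from earlier in this post (the carrot and the `"unknown"` fallback are additions for the sketch). The "before" version grows a new branch for every food; the "after" version does the same job with a lookup table.

```python
# Before: every new food means another copy-pasted branch.
def food_color_before(food):
    if food == "lettuce":
        return "green"
    elif food == "radish":
        return "red"
    elif food == "carrot":
        return "orange"
    else:
        return "unknown"


# After: the data lives in one table, and the logic is a single lookup.
FOOD_COLORS = {
    "lettuce": "green",
    "radish": "red",
    "carrot": "orange",
}


def food_color_after(food):
    return FOOD_COLORS.get(food, "unknown")


# Refactoring should not change behavior, so both versions must agree.
samples = ["lettuce", "radish", "carrot", "tofu"]
same_behavior = all(food_color_before(f) == food_color_after(f) for f in samples)
```

From the outside, nothing changed: same inputs, same outputs. That is exactly why the work is hard to pitch, and also why automated tests matter so much when doing it.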

But not all refactoring is to tackle tech debt. Some refactoring is to create performance improvements. Making things work faster, easier, and more reliably is an important business goal, whether that is faster for the application to do something (such as retrieve data or load a web page) or faster for a developer to create new features (because there is less tech debt, or better commented code).

Ok! That sums up what the “work” of software development for the web looks like (and if you're working on things like LLMs, graphics, or software-hardware interfaces, there are a few other things worth talking about). And it's also not the end of the story. In the future (if I get around to it or if there's demand), I'd like to create a Part 2, looking at some of the specific tools and languages developers use, and why/where; and a Part 3, to unpack the different roles in software development.

Thanks for reading.

Was this useful? Interesting? Have something to add? Let me know.

Seriously, I love getting email and hearing from readers. Shoot me a note at hi@romandesign.co and I promise I'll respond.

Thanks,

Roman